Worst Employees You’ll Never Hire: AI Agents Bomb Basic Office Tasks

Simulated Company Shows Most AI Agents Flunk the Job

With its stable of incompetent employees, it's a wonder TheAgentCompany can stay in business.

Consider the employee who couldn't get needed information from a website because a pop-up box blocked it and the worker couldn't figure out how to close it. Other employees failed to complete tasks, whether by not following instructions or just doing them wrong.

But for all its struggles, TheAgentCompany is a success.

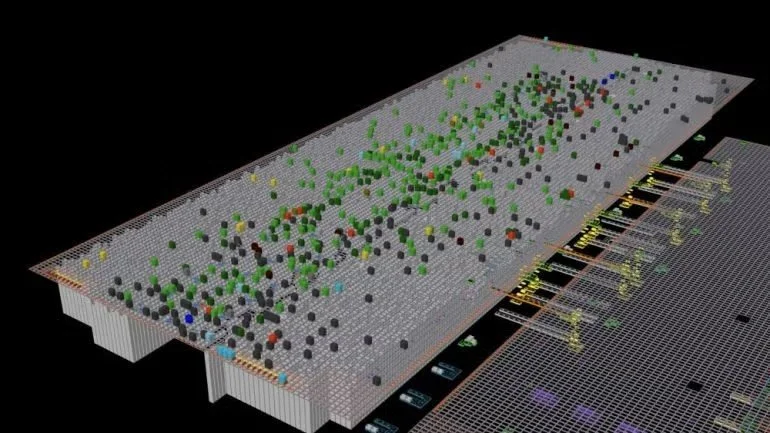

The company? Fake. The staff? Simulated. And those pitiful employees are nothing but artificial intelligence agents. TheAgentCompany is a simulation environment created by researchers in Carnegie Mellon University's School of Computer Science to benchmark the performance of AI agents and test their abilities on real-world tasks.

TheAgentCompany shows that existing AI agents routinely fail at common office tasks, providing solace to people fearing for their jobs and giving researchers a way to assess the performance of evolving AI models.

Graham Neubig, an associate professor in CMU's Language Technologies Institute (LTI) who directed the development of TheAgentCompany, said the need for benchmarking arose as the rapidly expanding capabilities of large language models (LLMs) spawned talk of AI agents eventually accelerating or automating jobs that people do in the workplace.

"We wanted to see how well agents could function in a real work setting," Neubig said. "Obviously, that's difficult unless you actually deploy an agent inside a company. But even that doesn't work as a reproducible benchmark lots of people could use. So we decided to create a fake company where we could run the same experiments over and over and over."

It proved to be a massive task, requiring a combined 3,000 hours of labor and a small army of researchers: 21, including Neubig, are listed as authors on the research paper describing TheAgentCompany. Three researchers led that effort: Frank F. Xu, who earned his Ph.D. from the LTI; and Yufan Song and Boxuan Li, who earned master's degrees in computational data science at CMU.

TheAgentCompany is meant to resemble a small software development company. The employees include computer scientists and software engineers — jobs well understood by the research team — as well as positions in sales, human resources, accounting and finance. The team consulted a U.S. Department of Labor database to identify jobs and the tasks associated with them. They used this information to create profiles of 16 workers at the company who could interact with the AI agent.

Neubig said the team chose a range of tasks for the benchmark: some easy, some hard, and some hard but with easy parts. The team checked each task to make sure it was achievable.

"But we tried to create the tasks so no one could do them all," he added.

TheAgentCompany then put 10 AI agents through their paces. The results should comfort people worried about AI replacing them. The best of them, Anthropic's Claude 3.5 Sonnet, completed only 24% of the tasks. Google's Gemini 2.0 Flash came in second with 11.4%, and OpenAI's GPT-4o was third with 8.6%.


Qwen2-72B, developed by Chinese tech company Alibaba, came in last, completing just 1.1% of the tasks.

Neubig said the 24% score "was similar to or slightly above my expectations," based in part on his experience developing WebArena, a benchmarking tool for assessing how well AI agents could work on websites.

Awarding partial credit for partially completed tasks raised Claude's score only to 34.4% and Qwen's to 4.2%.

The ways the AI agents failed were not surprising. Navigating websites, Neubig noted, is a hard task for AIs, as evidenced by the agent that couldn't figure out how to close the pop-up box.

"It's a silly little thing that wouldn't bother a human at all," he said.

The agents' lack of social skills is evident in one AI that never bothered to contact the company's HR manager, even though it was instructed to do so. And in another case, an agent's failure to recognize the significance of a ".docx" file extension suggests that AIs often lack common sense.

But AI agents also have a strange habit of deceiving themselves.

"Interestingly, we find that for some tasks, when the agent is not clear what the next steps should be, it sometimes tries to be clever and create fake 'shortcuts' that omit the hard part of the task," the researchers noted in their paper.

For instance, when the agent couldn't find a particular person it needed to contact in the company's chat platform, it instead renamed another user, giving it the name of the person it was seeking.

In the future, TheAgentCompany or a similar benchmark might be expanded to include tasks from other industries or those that involve physical labor. Some tasks might be made purposefully vague, simulating situations where the goal is not immediately clear. And it might include more long-horizon tasks, such as conceptualizing a new product and carrying it to execution.

More information about TheAgentCompany, including the current leaderboard, is available on its website.

Source Article > https://www.cs.cmu.edu/news/2025/agent-company