Is Atlas About to Leap Past Industrial Piece-Picking Robots?

While humanoid robots like Boston Dynamics' latest iteration of Atlas have made impressive strides in mobility and dynamic movement, they have not yet entered the specialized domain of precision piece picking—an area where purpose-built robotic systems are already deployed and proven in industrial and logistics operations worldwide.

Today’s most advanced piece-picking industrial robots are not theoretical. They are already being tested, piloted, and run in production in warehouse environments worldwide.

However, the recent collaboration between Boston Dynamics and NVIDIA marks a pivotal moment. Their joint work on robotic dexterity and manipulation, especially grasping, adaptability, and task execution in unstructured environments, signals the beginning of a potential paradigm shift. It points to a future in which humanoid platforms like Atlas could replace or augment traditional piece-picking systems, offering greater mobility and flexibility than today’s stationary industrial robots.

Crucially, Atlas could also supplement or even replace human operators in physically demanding, repetitive warehouse tasks—particularly during night shifts and weekend operations, where labor shortages and higher operational costs remain persistent challenges.

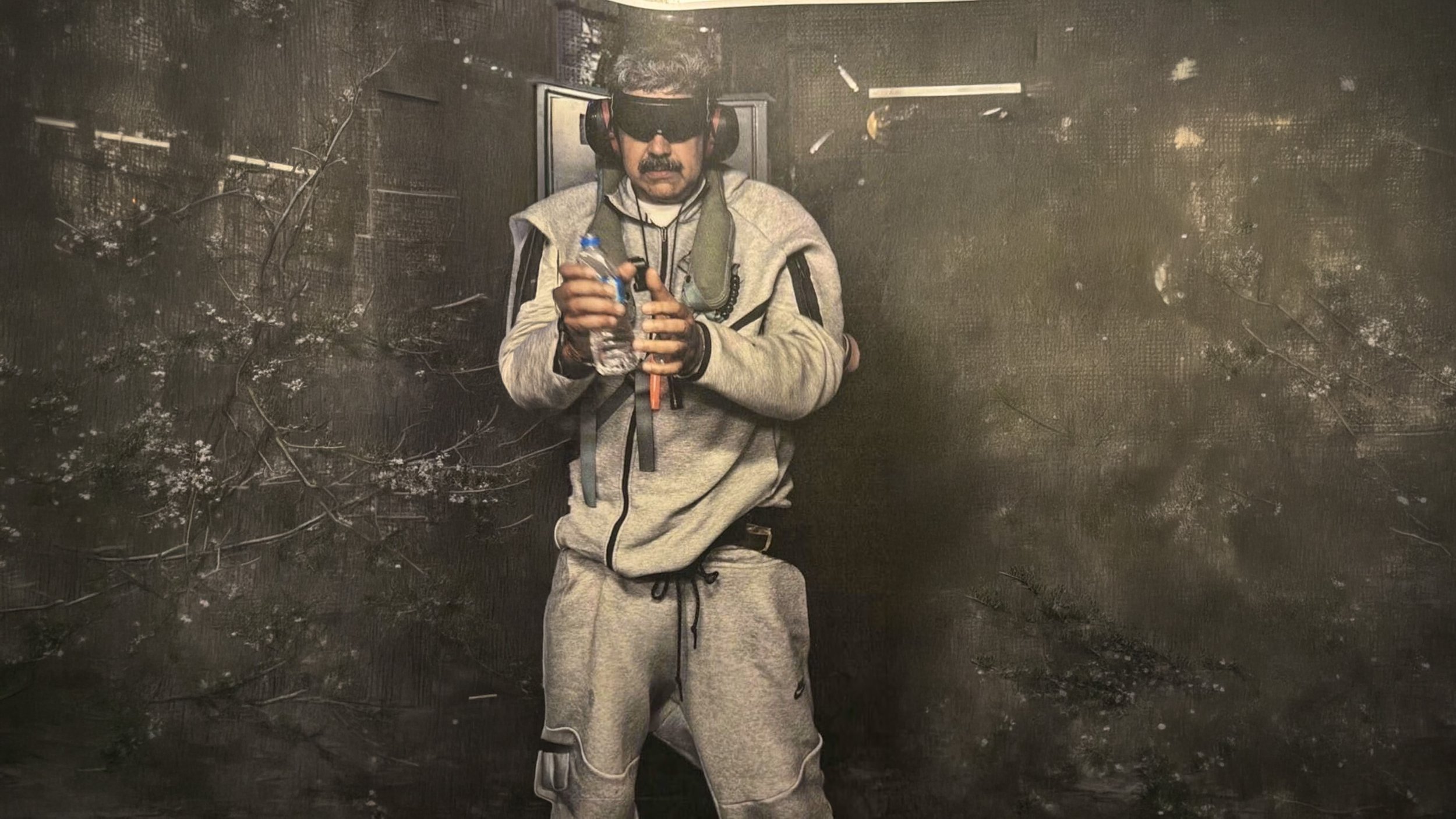

Video: the Boston Dynamics Atlas MTS robot successfully grasping industrial objects using DextrAH-RGB

Closing the Gap: NVIDIA and Boston Dynamics Advance Atlas Toward Industrial-Grade Manipulation

In the dynamic landscape of modern automation, the need for robotic systems that can adapt and react in unstructured environments is paramount. While robotic arms are commonplace for repetitive tasks like assembly and packaging, the increasing complexity of applications demands perceptive robots capable of making decisions based on real-time data. This adaptability fosters greater flexibility and enhances safety through hazard awareness.

This edition of the NVIDIA Robotics Research and Development Digest (R²D²) explores several workflows and AI models from NVIDIA Research that tackle critical challenges in robot dexterity, manipulation, and grasping, with a particular focus on **adaptability and data scarcity**.

Understanding Robot Dexterity

At its core, robot dexterity involves manipulating objects with precision, adaptability, and efficiency. It encompasses fine motor control, coordination, and the ability to handle a wide range of tasks, often in environments that aren't perfectly controlled or predictable. Key elements include grip, manipulation, tactile sensitivity, agility, and coordination. This capability is increasingly vital in industries like manufacturing, healthcare, and logistics, enabling automation for tasks traditionally requiring human-level precision.

However, achieving dexterous grasping, especially with diverse objects or in dynamic settings, remains a significant challenge for traditional robotic methods. These methods often struggle with difficult object properties such as reflective surfaces, and they fail to generalize effectively to new objects or changing conditions. NVIDIA Research is addressing these challenges by developing end-to-end foundation models and workflows designed to enable **robust manipulation across varied objects and environments**.

Key NVIDIA Workflows and Models for Dexterity

This R²D² digest highlights three significant contributions:

DextrAH-RGB: A workflow for dexterous grasping using stereo RGB input.

DexMimicGen: A data generation pipeline designed for bimanual dexterous manipulation, leveraging imitation learning.

GraspGen: A large synthetic dataset of over 57 million grasps for different robots and grippers.

Let's explore these in more detail:

1. DextrAH-RGB: Dexterous Grasping from Stereo RGB

DextrAH-RGB is a workflow enabling **dexterous arm-hand grasping** using only stereo RGB camera input. A key feature is that its policies are trained entirely in simulation, yet generalize to novel objects when deployed. Training runs at scale in NVIDIA Isaac Lab across a wide variety of objects.
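DextrAH-RGB does not compute depth explicitly; the policy learns to infer it from the image pair. The classical rectified-stereo relation Z = f·B/d still shows why a stereo pair carries depth information at all: a point's horizontal disparity d between the left and right images shrinks as its distance Z grows. The minimal Python sketch below illustrates only that geometry; the function name and the camera values (focal length, baseline) are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair (pinhole model): Z = f * B / d."""
    if disparity_px <= 0:
        # Zero or negative disparity means the point is at infinity or the match is invalid.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 600 px focal length, 6 cm baseline between the two lenses.
near = depth_from_disparity(600.0, 0.06, 90.0)  # large disparity -> close point (about 0.4 m)
far = depth_from_disparity(600.0, 0.06, 45.0)   # half the disparity -> twice as far (about 0.8 m)
```

Halving the disparity doubles the estimated depth, which is also why depth precision degrades for distant objects.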

The training pipeline consists of two stages:

Stage 1: Teacher Policy Training: A privileged teacher policy is trained with reinforcement learning (RL) in simulation. This teacher is a fabric-guided policy (FGP) that acts in a geometric fabric action space. Geometric fabrics produce vectorized low-level control signals (position, velocity, acceleration) that are sent as commands to the robot's controller; because they embed safety and goal-reaching behaviors, including collision avoidance, they enable rapid iteration. The teacher policy includes an LSTM layer that lets it reason about and adapt to world physics, enabling corrective behaviors such as regrasping and recognizing whether a grasp has succeeded, so that it can react to the current dynamics. Domain randomization of physics, visual, and perturbation parameters increases environmental difficulty during training, improving robustness and adaptability.
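To make the domain randomization idea concrete, here is a minimal Python sketch that samples physics, visual, and perturbation parameters per episode and widens their ranges as a difficulty level rises. Everything here is an assumption for illustration: the parameter names and ranges are hypothetical, and the success-gated difficulty schedule is one common curriculum choice, not the scheme DextrAH-RGB or Isaac Lab actually uses.

```python
import random
from dataclasses import dataclass


@dataclass
class RandomizationRange:
    low: float
    high: float

    def sample(self, rng: random.Random, difficulty: float) -> float:
        # At difficulty 0 return the nominal (midpoint) value; at 1 sample the full range.
        mid = 0.5 * (self.low + self.high)
        half_width = 0.5 * (self.high - self.low) * difficulty
        return rng.uniform(mid - half_width, mid + half_width)


# Hypothetical parameters covering the three categories named in the text.
RANGES = {
    "object_mass_kg": RandomizationRange(0.05, 1.5),   # physics
    "friction_coeff": RandomizationRange(0.3, 1.2),    # physics
    "light_intensity": RandomizationRange(0.4, 1.6),   # visual
    "push_force_n": RandomizationRange(0.0, 5.0),      # perturbation
}


def sample_episode_params(rng: random.Random, difficulty: float) -> dict:
    """Draw one randomized environment configuration for a training episode."""
    difficulty = min(max(difficulty, 0.0), 1.0)
    return {name: r.sample(rng, difficulty) for name, r in RANGES.items()}


def update_difficulty(current: float, success_rate: float,
                      threshold: float = 0.8, step: float = 0.1) -> float:
    """Hypothetical curriculum: widen randomization only once the policy succeeds often enough."""
    return min(1.0, current + step) if success_rate >= threshold else current


rng = random.Random(0)
difficulty = 0.0
for success_rate in [0.5, 0.85, 0.9, 0.7]:  # illustrative evaluation results, not real data
    difficulty = update_difficulty(difficulty, success_rate)
params = sample_episode_params(rng, difficulty)
```

Gating the widening on success rate keeps early training tractable while still exposing the policy to the full range of conditions later.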

Stage 2: Student Policy Distillation: The teacher policy is then distilled, in simulation, into an RGB-based student policy trained on photorealistic tiled rendering. Distillation uses DAgger (Dataset Aggregation), an imitation learning algorithm. The student policy takes stereo RGB images as input, which lets it implicitly infer depth and object positions.
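The core of DAgger is that the teacher labels the states the student actually visits, and every round's labels are aggregated into one growing dataset. The toy Python sketch below shows that loop under heavy simplifying assumptions: the "state" is a single number, the teacher's rule, the student's observation function, and the linear least-squares student are all hypothetical stand-ins for the privileged teacher, rendered stereo images, and neural network in DextrAH-RGB.

```python
import random


def observe(x: float) -> float:
    # Hypothetical stand-in for the student's camera view: it never sees x directly.
    return 2.0 * x


def teacher_action(x: float) -> float:
    # Hypothetical privileged teacher: acts on the true state (e.g. exact object position).
    return -0.5 * x


def fit_linear(dataset):
    # Least-squares fit of a = w * obs over the whole aggregated dataset.
    num = sum(o * a for o, a in dataset)
    den = sum(o * o for o, _ in dataset)
    return num / den if den > 0 else 0.0


def dagger(iterations: int = 5, episodes: int = 20, horizon: int = 10, seed: int = 0) -> float:
    rng = random.Random(seed)
    dataset = []  # aggregated (observation, teacher label) pairs from all rounds
    w = 0.0       # the student starts untrained
    for i in range(iterations):
        beta = 0.5 ** i  # probability of executing the teacher's action this round
        for _ in range(episodes):
            x = rng.uniform(-1.0, 1.0)
            for _ in range(horizon):
                obs = observe(x)
                # Key DAgger step: the teacher labels the states the mixed policy visits.
                dataset.append((obs, teacher_action(x)))
                a = teacher_action(x) if rng.random() < beta else w * obs
                x += a
        w = fit_linear(dataset)  # retrain the student on everything collected so far
    return w


w = dagger()
```

Because the teacher here is exactly linear in the student's observation, the student recovers it (w close to -0.25); in the real setting the point of aggregation is to cover the states the student's own mistakes lead it into.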

Collaboration with Boston Dynamics has demonstrated the simulation-to-real capabilities of DextrAH-RGB by deploying a generalist policy on the upper torso of the Atlas MTS robot. Using Atlas's three-fingered grippers, this system has shown robust, zero-shot simulation-to-real grasping of industrial objects and exhibits emerging failure detection and retry behaviors.

2. DexMimicGen: Generating Data for Bimanual Dexterous Manipulation

Training bimanual dexterous robots, especially humanoids, often runs into **data scarcity** for imitation learning (IL), because collecting demonstrations manually is tedious. DexMimicGen addresses this with a bimanual manipulation data generation workflow that turns a small number of human demonstrations into large-scale trajectory datasets. The goal is to enable robots to learn actions in simulation that can then be transferred to the real world.

The workflow uses simulation-based augmentation:

1. A human demonstrator provides a limited number of demonstrations via teleoperation.

2. DexMimicGen generates a large dataset of demonstration trajectories in simulation; for instance, 60 human demos were expanded to 21K demos in one publication.

3. A policy is trained on this generated dataset using IL and deployed to a physical robot.
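DexMimicGen builds on MimicGen, whose central idea is object-centric: a segment of a human demonstration is stored relative to the object being manipulated, then replayed relative to a new, randomly placed object to create a new demonstration. The minimal Python sketch below shows that transformation with planar (x, y, heading) poses instead of full 6D poses; the pose values, sampling ranges, and function names are hypothetical. The actual pipeline also stitches segments together, handles both arms, and executes each generated trajectory in simulation, keeping only the successful ones; all of that is omitted here.

```python
import math
import random
from typing import List, Tuple

Pose2D = Tuple[float, float, float]  # (x, y, heading in radians) in a planar world


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Return a * b: pose b, expressed in frame a, mapped into a's parent frame."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)


def invert(a: Pose2D) -> Pose2D:
    """Return a^-1 so that compose(a, invert(a)) is the identity."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), -(-s * ax + c * ay), -at)


def retarget_segment(src_eef: List[Pose2D], src_obj: Pose2D, new_obj: Pose2D) -> List[Pose2D]:
    """Keep every end-effector pose fixed relative to the object, then re-anchor it on new_obj."""
    offset = compose(new_obj, invert(src_obj))  # new_obj * src_obj^-1
    return [compose(offset, p) for p in src_eef]


def generate_segments(src_eef, src_obj, n: int, rng: random.Random):
    """Turn one human segment into n new ones by sampling new object poses."""
    out = []
    for _ in range(n):
        new_obj = (rng.uniform(0.3, 0.7), rng.uniform(-0.2, 0.2), rng.uniform(-math.pi, math.pi))
        out.append(retarget_segment(src_eef, src_obj, new_obj))
    return out


# Hypothetical source segment: the hand approaches an object sitting at (0.5, 0.0).
src_obj = (0.5, 0.0, 0.0)
src_eef = [(0.3, 0.0, 0.0), (0.4, 0.0, 0.0), (0.45, 0.0, 0.0)]
segments = generate_segments(src_eef, src_obj, n=100, rng=random.Random(0))
```

Each generated segment preserves the hand's approach relative to the object, which is what lets a handful of demonstrations cover many object placements.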

Bimanual manipulation is complex and requires precise coordination. DexMimicGen accounts for this by classifying subtasks into a taxonomy of parallel, coordination, and sequential subtasks. It executes independent (parallel) subtasks asynchronously, uses synchronization mechanisms for coordination subtasks, and enforces ordering constraints for sequential subtasks, ensuring precise alignment and logical task execution during data generation.
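The three subtask types can be illustrated with a small scheduler. The Python sketch below is a toy, not DexMimicGen's implementation: the `Subtask` fields, durations, and subtask names are hypothetical. Parallel subtasks run asynchronously on their own arm, coordination subtasks sharing a sync key wait for each other and run together, and a sequential subtask cannot start until a named subtask on the other arm has finished.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Subtask:
    name: str
    duration: int
    kind: str = "parallel"          # "parallel", "coordination", or "sequential"
    sync_key: Optional[str] = None  # coordination: subtasks sharing a key start and end together
    after: Optional[str] = None     # sequential: another subtask that must finish first


def schedule(arms: Dict[str, List[Subtask]]) -> Dict[str, Tuple[int, int]]:
    """Assign (start, end) times to every subtask while honoring the three constraint types."""
    pos = {arm: 0 for arm in arms}   # index of each arm's next unscheduled subtask
    free = {arm: 0 for arm in arms}  # time at which each arm becomes idle
    times: Dict[str, Tuple[int, int]] = {}
    progress = True
    while progress:
        progress = False
        for arm, tasks in arms.items():
            if pos[arm] >= len(tasks):
                continue
            task = tasks[pos[arm]]
            if task.kind == "coordination":
                # Synchronization: wait until every arm sharing this key has reached it.
                group = [a for a, ts in arms.items() if any(t.sync_key == task.sync_key for t in ts)]
                if not all(pos[a] < len(arms[a]) and arms[a][pos[a]].sync_key == task.sync_key
                           for a in group):
                    continue
                start = max(free[a] for a in group)
                end = start + max(arms[a][pos[a]].duration for a in group)
                for a in group:
                    times[arms[a][pos[a]].name] = (start, end)
                    free[a], pos[a] = end, pos[a] + 1
            else:
                start = free[arm]
                if task.kind == "sequential":
                    # Ordering constraint: cannot start before its predecessor ends.
                    if task.after not in times:
                        continue
                    start = max(start, times[task.after][1])
                # Parallel subtasks simply run asynchronously on their own arm.
                times[task.name] = (start, start + task.duration)
                free[arm], pos[arm] = start + task.duration, pos[arm] + 1
            progress = True
    if any(pos[a] < len(ts) for a, ts in arms.items()):
        raise ValueError("constraints cannot be satisfied (deadlock)")
    return times


left = [Subtask("reach_L", 3), Subtask("handover_L", 2, "coordination", sync_key="h"),
        Subtask("pour_L", 4, "sequential", after="steady_R")]
right = [Subtask("reach_R", 5), Subtask("handover_R", 2, "coordination", sync_key="h"),
         Subtask("steady_R", 1)]
plan = schedule({"left": left, "right": right})
```

In this example the left arm finishes reaching early but must wait for the right arm before the coordinated handover, and its final pour waits for the right arm's `steady_R` subtask.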

When deployed, models trained on data generated by DexMimicGen significantly outperformed models trained solely on human demonstrations: in a can-sorting task they achieved a 90% success rate, versus 0% for the human-demonstration-only models. This highlights DexMimicGen's effectiveness in reducing human effort and enabling robust robot learning for complex manipulation.

3. GraspGen: A Large-Scale Synthetic Grasp Dataset

To support research and development in robot grasping, GraspGen offers a new simulated dataset of **57 million grasps**, available on Hugging Face. The dataset includes 6D gripper transformations and success labels for a variety of object meshes.
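A typical way to use a dataset of 6D grasp poses with success labels is to keep the successful grasps for one gripper and map them from the object's frame into the world frame where the object actually sits. The minimal Python sketch below does this with 4x4 homogeneous transforms. It does not reflect GraspGen's actual file format on Hugging Face: the `GraspRecord` fields, gripper labels, and example values are hypothetical, and rotations are left as identity to keep the numbers readable.

```python
from dataclasses import dataclass
from typing import List

Matrix4 = List[List[float]]  # 4x4 homogeneous transform (rotation + translation)


@dataclass
class GraspRecord:
    object_id: str
    gripper: str           # hypothetical labels, e.g. "franka_panda", "robotiq_2f_140", "suction"
    T_obj_grasp: Matrix4   # 6D gripper pose expressed in the object mesh frame
    success: bool          # simulated success label


def matmul4(a: Matrix4, b: Matrix4) -> Matrix4:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Matrix4:
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def successful_grasps_in_world(records: List[GraspRecord], gripper: str,
                               T_world_obj: Matrix4) -> List[Matrix4]:
    """Keep successful grasps for one gripper and map them into the world frame."""
    return [matmul4(T_world_obj, r.T_obj_grasp)
            for r in records if r.gripper == gripper and r.success]


# Illustrative records (not real GraspGen data): two top-down grasps, one labeled as failed.
records = [
    GraspRecord("mug_01", "franka_panda", translation(0.0, 0.0, 0.10), True),
    GraspRecord("mug_01", "franka_panda", translation(0.02, 0.0, 0.10), False),
    GraspRecord("mug_01", "suction", translation(0.0, 0.0, 0.05), True),
]
T_world_obj = translation(0.5, 0.0, 0.10)  # object placed on a table in front of the robot
grasps = successful_grasps_in_world(records, "franka_panda", T_world_obj)
```

Storing grasps in the object frame is what makes them reusable: the same labels apply wherever the object is placed, as long as its world pose is known.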

GraspGen features grasps for three different grippers:

The Franka Panda gripper

The Robotiq 2F-140 industrial gripper

A single-contact suction gripper

Crucially, GraspGen was generated entirely in simulation, showcasing the benefits of automatic data generation for scaling datasets in both size and diversity.

Conclusion

As robots move into more complex and less-structured environments, the need for adaptability driven by real-time data processing becomes essential. The workflows and models discussed – DextrAH-RGB, DexMimicGen, and GraspGen – represent significant steps from NVIDIA Research towards addressing key challenges in robot dexterity, manipulation, and grasping, particularly concerning adaptability and the often-limiting issue of data scarcity. These developments, rooted in simulation and advanced AI techniques, pave the way for more capable and flexible robotic systems in the automation domain.

These insights are part of the ongoing NVIDIA Robotics Research and Development Digest (R²D²), which aims to give developers a deeper understanding of breakthroughs in physical AI and robotics applications.

Video 1. Training DextrAH-RGB in simulation

Figure 1. DextrAH-RGB training pipeline

Figure 2. Training the teacher policy for Atlas at scale using NVIDIA Isaac Lab

Figure 3. DexMimicGen workflow

Figure 4. Successfully sorting cans using a model trained on data generated with DexMimicGen

Figure 5. Proposed grasps for a range of different objects in the dataset

Figure 6. The coordinate frame convention for three grippers in the simulated GraspGen dataset: the Robotiq 2F-140 gripper (left), a single-contact suction gripper (center), and the Franka Panda gripper (right)

Original NVIDIA Developer Article > https://developer.nvidia.com/blog/r%C2%B2d%C2%B2-adapting-dexterous-robots-with-nvidia-research-workflows-and-models/