Amazon’s “Humanoid Park”: A Glimpse Into the Agentic Future of Delivery Robotics

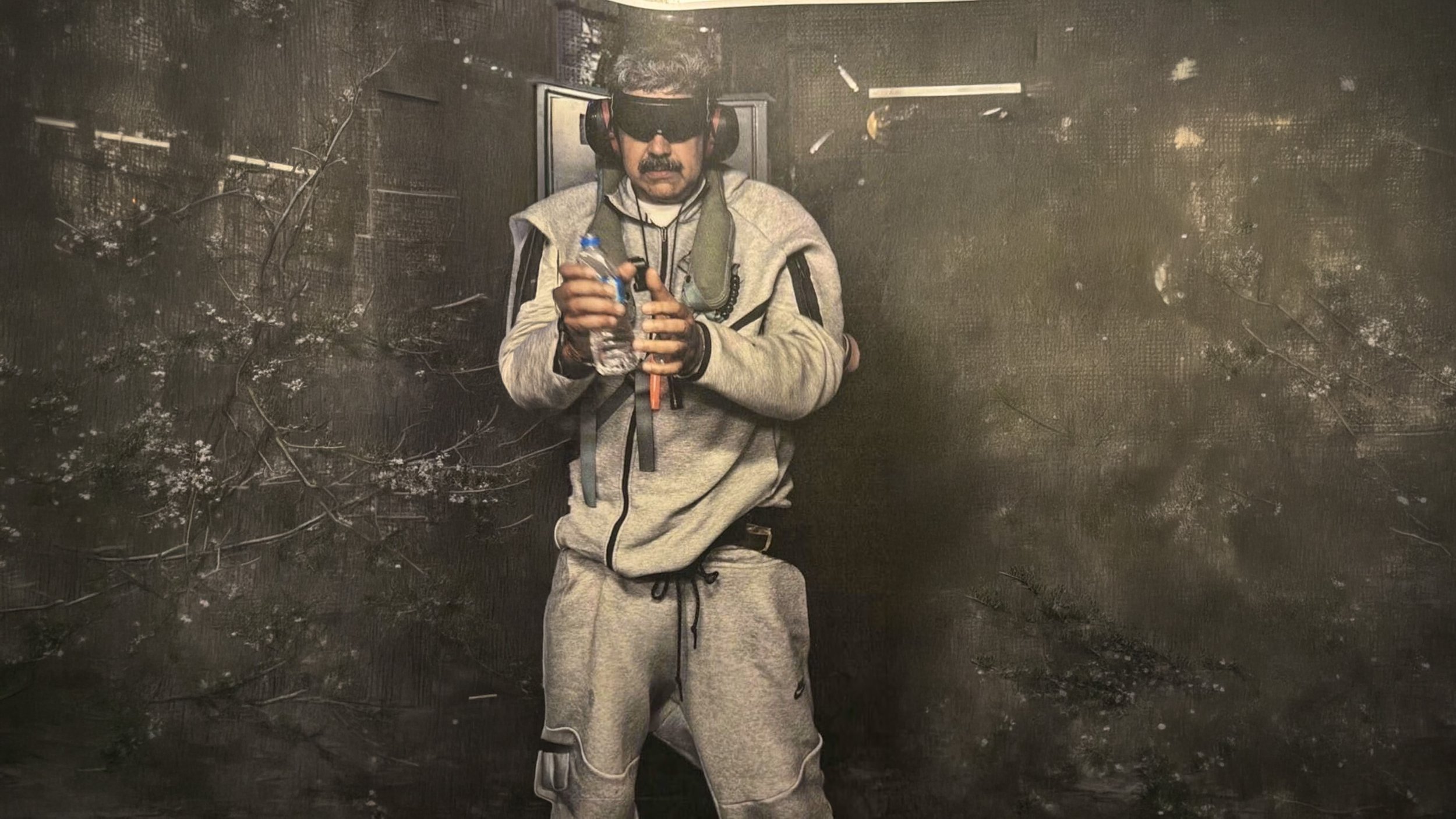

In a bold step toward agentic robotics, Amazon is quietly constructing what insiders call a “humanoid park” — a purpose-built indoor obstacle course designed to test the mobility and decision-making of humanoid robots in complex, real-world delivery scenarios. While the company has not publicly disclosed this initiative, reports indicate it’s unfolding at Amazon’s Lab126 facility in San Francisco — the same incubator responsible for Kindle, Echo, and the broader Alexa ecosystem.

This isn’t just another R&D sandbox. It’s a testbed for the convergence of agentic AI and dynamic robotics, where hardware and software fuse to reimagine the last mile.

From Task-Specific to Multi-Talented: Amazon’s AI Pivot

Amazon’s robotic ambitions have long been shaped by task-specific systems — palletizing arms, shuttle systems, and Kiva-based mobile bots that execute repetitive, deterministic workflows. But now, the company is leaning into multi-function, adaptable systems powered by agentic AI — the next evolutionary leap.

Yesh Dattatreya, a lead scientist at Lab126, explained that Amazon is developing software agents capable of dynamically interpreting natural language commands, orchestrating multiple tasks, and adapting to shifting conditions. The goal? Flexible robotic assistants that can unload trailers, retrieve repair parts, and assist in variable pick-pack scenarios — all within the same operational loop.

Agentic AI is central to this transformation. These systems are designed not only to act on commands but also to reason, self-correct, and respond autonomously to obstacles — a fundamental capability in the chaotic reality of urban delivery.
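
To make “reason, self-correct, and respond autonomously” a little more concrete, here is a minimal Python sketch of an act, observe, and replan loop. Everything in it is hypothetical and chosen for illustration only: the `run_task` function, the step names, the `FALLBACKS` table, and the simulated locked door do not reflect Amazon’s actual software, which has not been described publicly.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Outcome:
    ok: bool
    reason: str = ""


# Hypothetical recovery table: (failed step, failure reason) -> replacement steps.
FALLBACKS: dict[tuple[str, str], list[str]] = {
    ("enter_front_door", "door_locked"): ["ring_intercom", "enter_front_door"],
    ("climb_stairs", "stairs_blocked"): ["take_elevator"],
}


def run_task(plan: list[str],
             execute: Callable[[str], Outcome],
             max_replans: int = 3) -> list[str]:
    """Run steps in order; when one fails, splice in a fallback and keep going."""
    queue = list(plan)
    completed: list[str] = []
    replans = 0
    while queue:
        step = queue.pop(0)
        result = execute(step)
        if result.ok:
            completed.append(step)
            continue
        fallback = FALLBACKS.get((step, result.reason))
        if fallback is None or replans >= max_replans:
            raise RuntimeError(f"cannot recover from {step}: {result.reason}")
        replans += 1
        queue = fallback + queue  # self-correct: replace the failed step
    return completed


def make_simulated_world() -> Callable[[str], Outcome]:
    """Toy environment: the door is locked until the intercom is rung,
    and the stairs are always blocked."""
    state = {"door_unlocked": False}

    def execute(step: str) -> Outcome:
        if step == "ring_intercom":
            state["door_unlocked"] = True
            return Outcome(True)
        if step == "enter_front_door" and not state["door_unlocked"]:
            return Outcome(False, "door_locked")
        if step == "climb_stairs":
            return Outcome(False, "stairs_blocked")
        return Outcome(True)

    return execute


completed = run_task(
    ["enter_front_door", "climb_stairs", "drop_package"],
    make_simulated_world(),
)
print(completed)
# ['ring_intercom', 'enter_front_door', 'take_elevator', 'drop_package']
```

A real agentic system would presumably generate its recovery steps with a planner or a language model rather than look them up in a fixed table; the table simply keeps the loop easy to follow.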

Why Humanoids — and Why Now?

While the term “humanoid robot” may evoke images of science fiction, Amazon’s interest is deeply pragmatic. The physical environments where last-mile delivery happens (stairwells, doorways, apartment complexes, and irregular terrain) are hard on wheeled platforms and rigid manipulators. A bipedal form factor, with legs for locomotion and arms for manipulation, remains one of the most adaptable templates for a human-built world.

However, Amazon isn’t building the robots — at least not yet. The current iteration of the project relies on third-party humanoid platforms, likely from startups such as Figure, Apptronik, or Agility Robotics. What Amazon is building is the brain: a scalable AI architecture capable of integrating vision, locomotion planning, natural language understanding, and real-time decision-making.

Logistics Meets Spatial Intelligence

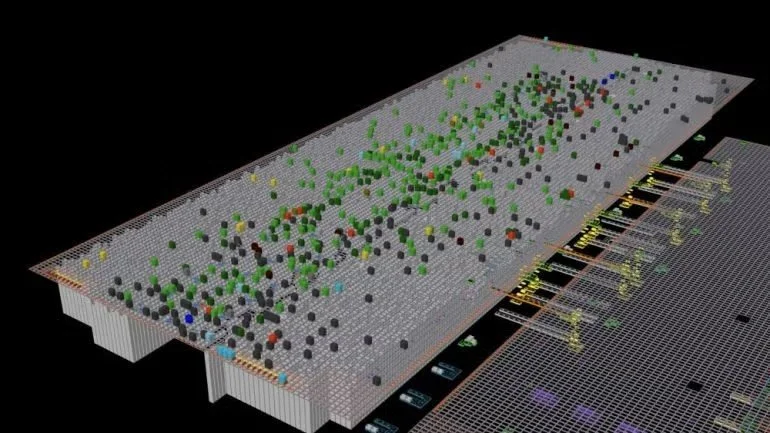

One major constraint in current delivery systems is navigation at the delivery endpoint — the so-called “last 50 feet.” Amazon is addressing this with an AI-powered geospatial engine that constructs detailed 3D maps of delivery environments. These maps capture not just coordinates, but architectural geometry, entry points, and even dynamic obstacles like construction zones or parked vehicles.

This spatial layer is already being used by delivery drivers across the U.S., especially in multi-unit dwellings and office parks. The company is even prototyping AR glasses with an embedded display that give drivers hands-free, turn-by-turn directions from vehicle to doorstep.

In a future with humanoid delivery agents, this geospatial intelligence becomes critical. The humanoid must know not just where to go, but how to get there — whether that involves stairs, elevators, locked lobbies, or delivery lockers.
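
The “how to get there” problem can be pictured as route planning over a map whose connections record the kind of traversal involved. The sketch below is a toy, assuming a hand-written building graph and made-up traversal costs in seconds; `BUILDING`, `plan_route`, and the node names are all hypothetical, and the search is plain Dijkstra rather than anything Amazon has described.

```python
import heapq

# Hypothetical building graph: node -> [(neighbor, cost_seconds, traversal_type)].
BUILDING = {
    "curb": [("lobby", 20, "door")],
    "lobby": [
        ("floor2_landing", 30, "stairs"),
        ("floor2_landing", 45, "elevator"),
        ("locker_bank", 10, "walk"),
    ],
    "floor2_landing": [("unit_2b", 15, "walk")],
    "locker_bank": [],
    "unit_2b": [],
}


def plan_route(graph, start, goal, blocked=frozenset()):
    """Dijkstra's shortest path that skips any edge whose traversal type is blocked.

    Returns (total_cost, [(traversal_type, node), ...]) or None if unreachable.
    """
    frontier = [(0, start, [])]
    visited = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node in visited:
            continue
        visited.add(node)
        if node == goal:
            return cost, path
        for neighbor, step_cost, kind in graph[node]:
            if kind not in blocked and neighbor not in visited:
                heapq.heappush(
                    frontier, (cost + step_cost, neighbor, path + [(kind, neighbor)])
                )
    return None


print(plan_route(BUILDING, "curb", "unit_2b"))
# (65, [('door', 'lobby'), ('stairs', 'floor2_landing'), ('walk', 'unit_2b')])

print(plan_route(BUILDING, "curb", "unit_2b", blocked={"stairs"}))
# (80, [('door', 'lobby'), ('elevator', 'floor2_landing'), ('walk', 'unit_2b')])
```

Marking a traversal type as blocked stands in for a dynamic obstacle such as a closed stairwell; when every path to the unit is blocked, the function returns `None`, and a delivery locker would be the natural fallback destination.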

Beyond Delivery: Forecasting, Fulfillment, and the Invisible Hand of AI

Parallel to its robotic ambitions, Amazon is deploying AI across its supply chain to predict demand down to the SKU, ZIP code, and calendar day. It’s no longer just about replenishment — it’s about hyper-localized inventory placement and real-time fulfillment.

According to Nathan Smith, director of demand forecasting at Amazon, AI models now account for variables as granular as weather, local holidays, and regional preferences — enabling Amazon to “sell a different set of books in Boston than in Boise.” This AI-first mindset is being layered across every operational domain, from same-day logistics to carbon reduction.

The two efforts could eventually meet: AI-driven prediction models would feed demand signals downstream, and humanoid agents would carry out the physical delivery with a level of adaptability that fixed automation lacks.

The Path Forward

While Amazon remains quiet on the long-term roadmap — how many robots, when, or even what they’ll look like — the direction is unmistakable. The humanoid park is not a gimmick. It’s a prototype of what logistics could look like when mechanical dexterity, spatial awareness, and language-enabled decision-making converge in one system.

For robotics experts, this raises critical questions:

Control architectures: Will agentic systems be hybrid (cloud + edge), or fully autonomous at the edge?

Safety and compliance: How will humanoid delivery agents interface with real people, pets, and unpredictable environments?

Interoperability: Will Amazon’s software stack be vendor-agnostic, or tightly coupled to specific hardware platforms?
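
On the interoperability question, the vendor-agnostic option might look something like the following Python sketch: task logic written against a neutral interface, with a thin adapter per hardware platform. The interface, both adapters, and their command formats are invented for illustration; they do not correspond to any real vendor SDK or to Amazon’s stack.

```python
from typing import Protocol


class HumanoidPlatform(Protocol):
    """Hypothetical hardware-neutral interface the planning layer codes against."""

    def walk_to(self, waypoint: str) -> bool: ...
    def grasp(self, item: str) -> bool: ...


class VendorAAdapter:
    """Made-up adapter: imaginary vendor A accepts plain text commands."""

    def __init__(self) -> None:
        self.sent: list[str] = []

    def walk_to(self, waypoint: str) -> bool:
        self.sent.append(f"NAV {waypoint}")
        return True

    def grasp(self, item: str) -> bool:
        self.sent.append(f"GRIP {item}")
        return True


class VendorBAdapter:
    """Made-up adapter: imaginary vendor B accepts structured messages."""

    def __init__(self) -> None:
        self.sent: list[dict] = []

    def walk_to(self, waypoint: str) -> bool:
        self.sent.append({"op": "move", "target": waypoint})
        return True

    def grasp(self, item: str) -> bool:
        self.sent.append({"op": "pick", "object": item})
        return True


def deliver(robot: HumanoidPlatform, item: str, waypoint: str) -> bool:
    """Vendor-neutral task logic: pick up the item, then walk it to the waypoint."""
    return robot.grasp(item) and robot.walk_to(waypoint)


robot_a, robot_b = VendorAAdapter(), VendorBAdapter()
results = [deliver(robot, "parcel_17", "unit_2b") for robot in (robot_a, robot_b)]
print(results)  # [True, True]
```

The same `deliver` function drives both adapters unchanged. A tightly coupled stack would instead call one vendor’s commands directly from the task logic, trading portability for closer access to that platform’s capabilities.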

We are witnessing the earliest iterations of embodied AI in logistics. Whether it’s humanoid couriers, agentic warehouse bots, or AR navigation glasses, Amazon is staking its future on a deep integration of physical automation and cognitive autonomy.

The humanoid park may be a sandbox today, but it’s laying tracks for a full-scale reimagining of what it means to move goods in the modern world.

Author's Note:

If you work in warehouse automation, AI orchestration, or robotics engineering, let’s talk. As this new era unfolds, partnerships between software developers, AI researchers, and hardware OEMs will be the engine driving what’s next.