OpenAI-Backed 1X's Eve Humanoids Show Promising Progress

"The video contains no teleoperation," says Norwegian humanoid robot maker 1X. "No computer graphics, no cuts, no video speedups, no scripted trajectory playback. It's all controlled via neural networks, all autonomous, all 1X speed."

This is the humanoid manufacturer that OpenAI put its chips behind last year, as part of a US$25-million Series A funding round. A subsequent $100-million Series B showed how much sway OpenAI's attention is worth – as well as the overall excitement around general-purpose humanoid robot workers, a concept that's always seemed far off in the future, but that's gone absolutely thermonuclear in the last two years.

1X's humanoids look oddly undergunned next to what, say, Tesla, Figure, Sanctuary or Agility are working on. The Eve humanoid doesn't even have feet at this point, or dexterous humanoid hands. It rolls about on a pair of powered wheels, balancing on a third little castor wheel at the back, and its hands are rudimentary claws. It looks like it's dressed for a spot of luge, and has a dinky, blinky LED smiley face that gives the impression it's going to start asking for food and cuddles like a Tamagotchi.

1X does have a bipedal version called Neo in the works, which also has nicely articulated-looking hands – but perhaps these bits aren't super important in these early frontier days of general-purpose robots. The vast majority of early use cases would appear to go like this: "pick that thing up, and put it over there" – you hardly need piano-capable fingers to do that. And the main place they'll be deployed is in flat, concrete-floored warehouses and factories, where they probably won't need to walk up stairs or step over anything.

What's more, plenty of groups have already solved bipedal walking and built beautiful hand hardware. That's not the main hurdle. The main hurdle is getting these machines to learn tasks quickly and then go and execute them autonomously, like Toyota is doing with desk-mounted robot arms. When the Figure 01 "figured" out how to work a coffee machine by itself, it was a big deal. When Tesla's Optimus folded a shirt on video, and it turned out to be under the control of a human teleoperator, it was far less impressive.

In that context, check out this video from 1X.

The tasks in the video aren't massively complex or sexy; there's no shirt-folding or coffee machine operating. But there's a whole stack of complete-looking robots, doing a whole stack of picking things up and putting things down. They grab 'em from ankle height and waist height. They stick 'em in boxes, bins and trays. They pick up toys off the floor and tidy 'em away.

They also open doors for themselves, and pop over to charging stations and plug themselves in, using what looks like a needlessly complex squatting maneuver to reach a socket down near their ankles.

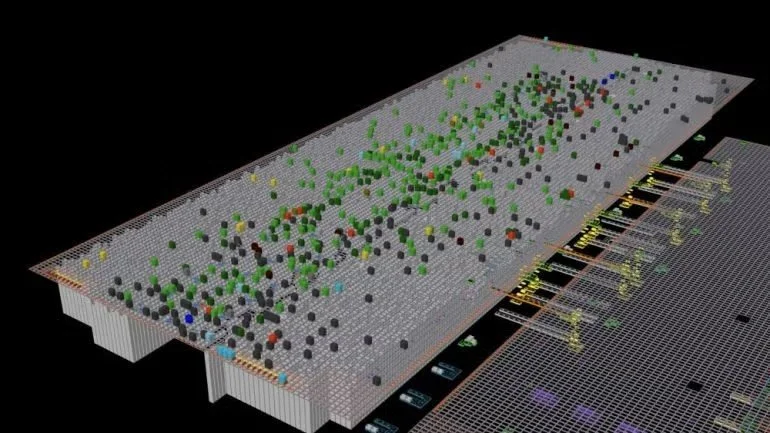

In short, these jiggers are doing pretty much exactly what they need to do in early general-purpose humanoid use cases, trained, according to 1X, "purely end-to-end from data." Essentially, the company trained 30 Eve bots on a number of individual tasks each, apparently using imitation learning via video and teleoperation. It then used these learned behaviors to train a "base model" capable of a broad set of actions and behaviors. That base model was fine-tuned toward environment-specific capabilities (warehouse tasks, general door manipulation, etc.), and finally the bots were trained on the specific jobs they had to do.

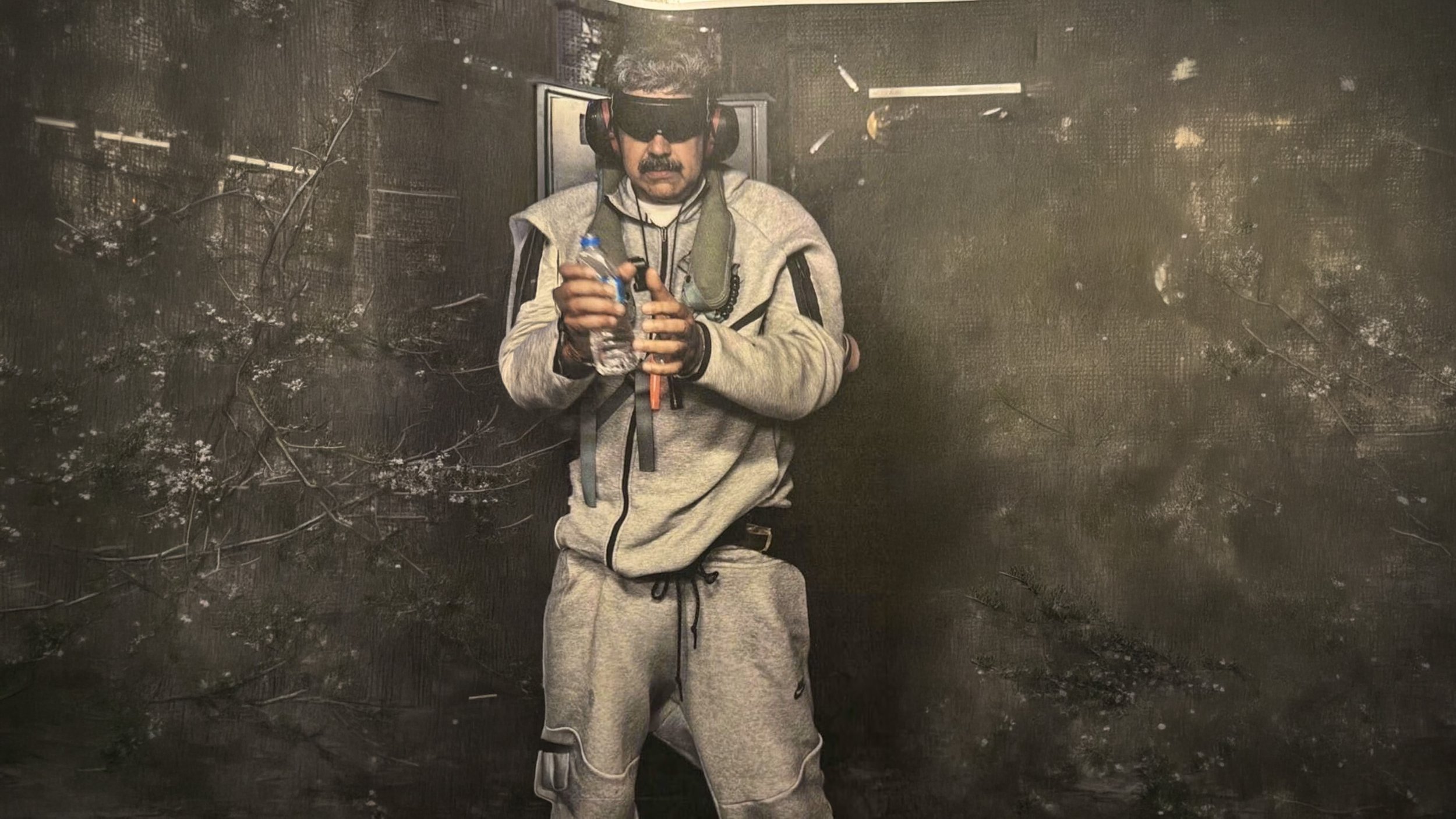

This last step is presumably the one that'll happen on site at customer locations as the bots are given their daily tasks, and 1X says it takes "just a few minutes of data collection and training on a desktop GPU." In an ideal world, this might mean somebody stands there in a VR headset and does the job for a bit, then deep learning software marries that task up with the bot's key abilities, runs it through a few thousand times in simulation to test various random factors and outcomes, and the bots are good to go.

"Over the last year," writes Eric Jang, 1X's VP of AI, in a blog post, "we’ve built out a data engine for solving general-purpose mobile manipulation tasks in a completely end-to-end manner. We’ve convinced ourselves that it works, so now we're hiring AI researchers in the SF Bay Area to scale it up to 10x as many robots and teleoperators."

Pretty neat stuff. We wonder when these things will be ready for prime time.

Source: 1X